The post Snapshots for IPC Fuzzing appeared first on Mozilla Hacks - the Web developer blog.

By running potentially harmful code with lower privileges, the impact of a potential code execution vulnerability is mitigated. In order to gain full control, the attacker now needs to find a second vulnerability that allows bypassing these privilege restrictions – which is colloquially known as a “sandbox escape”.

In order to achieve a sandbox escape, an attacker essentially has two options: The first one is to directly attack the underlying operating system from within the compromised content process. Since every process needs to interact with the operating system for various tasks, an attacker can focus on finding bugs in these interfaces to elevate privileges.

Since we have already deployed changes to Firefox that severely limit the OS interfaces exposed to low-privilege processes, the second attack option becomes more interesting: Exploiting bugs in privileged IPC endpoints. Since low privilege content processes need to interact with the privileged parent process, the parent needs to expose certain interfaces.

If these interfaces do not perform the necessary security checks or contain memory safety errors, the content process might be able to exploit them and perform actions with higher privileges, possibly leading to an entire parent process takeover.

Fuzzing has a long track record at Mozilla and has allowed us to find all sorts of problems in our code, including security vulnerabilities. However, applying fuzzing to our critical IPC interfaces has historically been difficult. This is primarily because IPC interfaces cannot be tested in isolation (they require the full browser), and because incorrect usage of IPC interfaces can force browser restarts, which introduce a prohibitive amount of latency between iterations.

To find a solution to this challenge, we engaged with the research community to apply a new method of rewinding application state during fuzzing. We saw our first results with this approach in 2021 using an experimental prototype that would later become the open source snapshot fuzzing tool called “Nyx”.
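To make the idea of rewinding state concrete, here is a minimal sketch in Rust. It is a toy under stated assumptions, not Nyx’s API and not Firefox code: `Target`, `handle_message`, and `fuzz_with_snapshot` are hypothetical names, and the “snapshot” is just an in-memory clone, whereas a real snapshot fuzzer captures and restores the state of the whole application. The point it illustrates is that each input starts from the same captured state, so a bad input cannot poison later iterations and no restart is needed between them.

```rust
/// Toy stand-in for a stateful fuzzing target (hypothetical).
#[derive(Clone, Debug, PartialEq)]
struct Target {
    messages_seen: u32,
    crashed: bool,
}

impl Target {
    /// Pretend to process one IPC message.
    fn handle_message(&mut self, input: &[u8]) {
        self.messages_seen += 1;
        // Assumption for illustration: this byte prefix triggers a bug.
        if input.starts_with(b"\xde\xad") {
            self.crashed = true;
        }
    }
}

/// Run every input against a fresh copy of the same snapshot and
/// return the indices of the inputs that crashed the target.
fn fuzz_with_snapshot(snapshot: &Target, inputs: &[Vec<u8>]) -> Vec<usize> {
    let mut crashes = Vec::new();
    for (index, input) in inputs.iter().enumerate() {
        // "Restore" the snapshot: start from the captured state again.
        let mut target = snapshot.clone();
        target.handle_message(input);
        if target.crashed {
            crashes.push(index);
        }
    }
    crashes
}
```

Because the snapshot itself is never mutated, a crashing input leaves no trace for the next iteration.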

As of 2024, we are happy to announce that we are now running various snapshot fuzzing targets for IPC in production. Snapshot fuzzing is a new technology that has become more popular in recent years and we are proud of our role in bringing it from concept to practicality.

Using this technology we have already been able to identify and fix a number of potential problems in our IPC layer and we will continue to improve our testing to provide you with the most secure version of Firefox.

If you’d like to know more, or even consider contributing to Mozilla, check out our post on the security blog explaining the technical architecture behind this new tool.


The post Sponsoring sqlite-vec to enable more powerful Local AI applications appeared first on Mozilla Hacks - the Web developer blog.

As a part of Mozilla Builders, we’ve launched an accelerator that developers can apply to join, but in parallel we have also been proactively recruiting specific open source projects that we feel have the potential to move AI forward and would benefit from Mozilla’s investment, expertise, and support. Our first such Builders project is llamafile, led by open source developer Justine Tunney. llamafile makes open LLMs run fast on everyday consumer hardware while also making open source AI dramatically more accessible and usable.

Today we’re proud to announce the next Mozilla Builders project: sqlite-vec. Led by independent developer Alex Garcia, this project brings vector search functionality to the beloved SQLite embedded database.

Alex has been working on this problem for a while, and we think his latest approach will have a great impact by providing application developers with a powerful new tool for building Local AI applications.

“I’m very excited for sqlite-vec to be a Mozilla Builders project”, said Alex Garcia. “I care a lot about building software that is easy to get started with and works everywhere, a trait obviously shared by other Builders projects like llamafile. AI tools are no exception — a vector database that runs everywhere means more equitable access for everyone.”

Vector databases are emerging as a key component of the AI application stack, supporting uses like retrieval augmented generation (RAG) and semantic search. But few of today’s available databases are designed for on-device use, making it harder to offer functionality like RAG in Local AI apps. SQLite is a mature and widely deployed embedded database – in fact, it’s even built into Mozilla’s own Firefox web browser.

The prospect of a vector-enabled SQLite opens up many new possibilities for locally-running AI applications. For example, imagine a chatbot that can answer questions about your personal data without letting a single byte of that data leave the privacy and safety of your laptop.
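For readers new to vector search, the core operation can be sketched in a few lines of Rust. This is a brute-force illustration under our own assumptions, not sqlite-vec’s API or implementation: `cosine_similarity` and `top_k` are hypothetical helper names, and the embeddings are made-up two-dimensional vectors. It shows how a query vector is compared against stored vectors and the closest matches are returned, entirely on the local machine.

```rust
/// Cosine similarity between two equal-length vectors.
/// Returns 0.0 if either vector has zero length.
fn cosine_similarity(a: &[f32], b: &[f32]) -> f32 {
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        return 0.0;
    }
    dot / (norm_a * norm_b)
}

/// Return the names of the `k` documents most similar to `query`,
/// best match first (brute force: every document is scored).
fn top_k<'a>(query: &[f32], docs: &'a [(&'a str, Vec<f32>)], k: usize) -> Vec<&'a str> {
    let mut scored: Vec<(f32, &str)> = docs
        .iter()
        .map(|(name, vector)| (cosine_similarity(query, vector), *name))
        .collect();
    // Sort by descending similarity.
    scored.sort_by(|a, b| b.0.total_cmp(&a.0));
    scored.into_iter().take(k).map(|(_, name)| name).collect()
}
```

A real vector database adds persistent storage and a query interface on top of this basic comparison.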

We’re excited to be working with Alex and supporting his efforts on sqlite-vec. We encourage you to follow the project’s progress, and Alex welcomes your contributions. And Mozilla’s Discord server is a great place to connect with Alex, the Mozilla Builders team, and everyone else in our growing community of open source practitioners. Please stop by and introduce yourself.


The post Experimenting with local alt text generation in Firefox Nightly appeared first on Mozilla Hacks - the Web developer blog.

Why alt text?

Web pages have a fundamentally simple structure, with semantics that allow the browser to interpret the same content differently for different people based on their own needs and preferences. This is a big part of what we think makes the Web special, and what enables the browser to act as a user agent, responsible for making the Web work for people.

This is particularly useful for assistive technology such as screen readers, which are able to work alongside browser features to reduce obstacles for people to access and exchange information. For static web pages, this generally can be accomplished with very little interaction from the site, and this access has been enormously beneficial to many people.

But even for a simple static page there are certain types of information, like alternative text for images, that must be provided by the author to provide an understandable experience for people using assistive technology (as required by the spec). Unfortunately, many authors don’t do this: the Web Almanac reported in 2022 that nearly half of images were missing alt text.

Until recently, it has not been feasible for the browser to infer reasonably high quality alt text for images without sending potentially sensitive data to a remote server. However, recent developments in AI have enabled this type of image analysis to happen efficiently, even on a CPU.

We are adding a feature within the PDF editor in Firefox Nightly to validate this approach. As we develop it further and learn from the deployment, our goal is to offer it for users who’d like to use it when browsing to help them better understand images which would otherwise be inaccessible.

Generating alt text with small open source models

We are using Transformer-based machine learning models to describe images. These models are getting good at describing the contents of an image, yet are compact enough to operate on devices with limited resources. While they can’t outperform a large language model like GPT-4 Turbo with Vision or LLaVA, they are sufficiently accurate to provide valuable insights on-device across a diversity of hardware.

Model architectures like BLIP or even ViT that were trained on datasets like COCO (Common Objects in Context) or Flickr30k are good at identifying objects in an image. When combined with a text decoder like OpenAI’s GPT-2, they can produce alternative text with 200M or fewer parameters. Once quantized, these models can be under 200MB on disk, and run in a couple of seconds on a laptop – a big reduction compared to the gigabytes and resources an LLM requires.
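The size figure follows from simple arithmetic: on-disk size is roughly the number of parameters times the bits stored per weight. The sketch below assumes 8-bit quantization (one byte per weight) and ignores file-format overhead; both are our assumptions for illustration, and `approx_model_size_mb` is a hypothetical helper, not part of any library.

```rust
/// Rough on-disk size in megabytes (10^6 bytes) for a model with the
/// given parameter count and bits per stored weight.
/// Ignores metadata and file-format overhead.
fn approx_model_size_mb(parameters: u64, bits_per_weight: u32) -> f64 {
    (parameters as f64) * (bits_per_weight as f64) / 8.0 / 1_000_000.0
}
```

Under these assumptions, a 200M-parameter model is about 800MB at 32 bits per weight and about 200MB at 8 bits, which is consistent with the “under 200MB” figure for models with fewer parameters than that.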

Example Output

The image below (pulled from the COCO dataset) is described by:

- FIREFOX – our 182M-parameter model, which uses a distilled version of GPT-2 alongside a Vision Transformer (ViT) image encoder.

- BASELINE MODEL – a slightly bigger ViT+GPT-2 model

- HUMAN TEXT – the description provided by the dataset annotator.

Both small models lose accuracy compared to the description provided by a person, and the baseline model is confused by the position of the hands. The Firefox model does slightly better in this case and captures what is important.

What matters is subjective in any case. Notice how the person did not write about the office settings or the cherries on the cake, and specified that the candles were long.

If we run the same image on a model like GPT-4o, the results are extremely detailed:

The image depicts a group of people gathered around a cake with lit candles. The focus is on the cake, which has a red jelly topping and a couple of cherries. There are several lit candles in the foreground. In the background, there is a woman smiling, wearing a gray turtleneck sweater, and a few other people can be seen, likely in an office or indoor setting. The image conveys a celebratory atmosphere, possibly a birthday or a special occasion.

But such a level of detail in alt text is overwhelming and doesn’t prioritize the most important information. Brevity is not the only goal, but it’s a helpful starting point, and pithy accuracy in a first draft allows content creators to focus their edits on missing context and details.

So if we ask the LLM for a one-sentence description, we get:

A group of people in an office celebrates with a lit birthday cake in the foreground and a smiling woman in the background.

This has more detail than our small model, but can’t be run locally without sending your image to a server.

Small is beautiful

Running inference locally with small models offers many advantages:

- Privacy: All operations are contained within the device, ensuring data privacy. We won’t have access to your images, PDF content, generated captions, or final captions. Your data will not be used to train the model.

- Resource Efficiency: Small models eliminate the need for high-powered GPUs in the cloud, reducing resource consumption and making it more environmentally friendly.

- Increased Transparency: In-house management of models allows for direct oversight of the training datasets, offering more transparency compared to some large language models (LLMs).

- Carbon Footprint Monitoring: Training models in-house facilitates precise tracking of CO2 emissions using tools such as CodeCarbon.

- Ease of Improvement: Since retraining can be completed in less than a day on a single piece of hardware, it allows for frequent updates and enhancements of the model.

Integrating Local Inference into Firefox

Extending the Translations inference architecture

Firefox Translations uses the Bergamot project powered by the Marian C++ inference runtime. The runtime is compiled into WASM, and there’s a model file for each translation task.

For example, if you run Firefox in French and visit an English page, Firefox will ask if you want to translate it to French and download the English-to-French model (~20MiB) alongside the inference runtime. This is a one-shot download: translations will happen completely offline once those files are on disk.

The WASM runtime and models are both stored in the Firefox Remote Settings service, which allows us to distribute them at scale and manage versions.

The inference task runs in a separate process, which prevents the browser or one of its tabs from crashing if the inference runtime crashes.

ONNX and Transformers.js

We’ve decided to embed the ONNX runtime in Firefox Nightly along with the Transformers.js library to extend the translation architecture to perform different inference work.

Like Bergamot, the ONNX runtime has a WASM distribution and can run directly in the browser. The ONNX project has recently introduced WebGPU support, which will eventually be activated in Firefox Nightly for this feature.

Transformers.js provides a JavaScript layer on top of the ONNX inference runtime, making it easy to add inference for a huge list of model architectures. The API mimics the very popular Python library. It does all the tedious work of preparing the data that is passed to the runtime and converting the output back to a usable result. It also deals with downloading models from Hugging Face and caching them.

From the project’s documentation, this is how you can run a sentiment analysis model on a text:

```js
import { pipeline } from '@xenova/transformers';

// Allocate a pipeline for sentiment-analysis
let pipe = await pipeline('sentiment-analysis');

let out = await pipe('I love transformers!');
// [{'label': 'POSITIVE', 'score': 0.999817686}]
```

Using Transformers.js gives us confidence when trying out a new model with ONNX. If its architecture is listed in the Transformers.js documentation, that’s a good indication it will work for us.

To vendor it into Firefox Nightly, we’ve slightly changed its release to distribute ONNX separately from Transformers.js, dropped Node.js-related pieces, and fixed those annoying eval() calls the ONNX library ships with. You can find the build script here which was used to populate that vendor directory.

From there, we reused the Translation architecture to run the ONNX runtime inside its own process, and have Transformers.js run with a custom model cache system.

Model caching

The Transformers.js project can use local and remote models and has a caching mechanism using the browser cache. Since we are running inference in an isolated web worker, we don’t want to provide access to the file system or store models inside the browser cache. We also don’t want to use Hugging Face as the model hub in Firefox, and want to serve model files from our own servers.

Since Transformers.js provides a callback for a custom cache, we have implemented a specific model caching layer that downloads files from our own servers and caches them in IndexedDB.
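The shape of such a caching layer can be sketched as follows. This is an illustration in Rust under our own assumptions, not the code that ships in Firefox (which is JavaScript backed by IndexedDB): `ModelCache` is a hypothetical type, an in-memory `HashMap` stands in for the persistent store, and the `fetch` closure stands in for a download from our servers.

```rust
use std::collections::HashMap;

/// Cache-first model store: look up locally, download only on a miss.
struct ModelCache<F: Fn(&str) -> Option<Vec<u8>>> {
    store: HashMap<String, Vec<u8>>,
    fetch: F,
    /// Number of times `fetch` actually produced a file.
    downloads: usize,
}

impl<F: Fn(&str) -> Option<Vec<u8>>> ModelCache<F> {
    fn new(fetch: F) -> Self {
        ModelCache { store: HashMap::new(), fetch, downloads: 0 }
    }

    /// Return the bytes for `name`, fetching and caching them if needed.
    /// Returns `None` if the file is not cached and cannot be fetched.
    fn get(&mut self, name: &str) -> Option<&[u8]> {
        if !self.store.contains_key(name) {
            let bytes = (self.fetch)(name)?;
            self.downloads += 1;
            self.store.insert(name.to_string(), bytes);
        }
        self.store.get(name).map(|bytes| bytes.as_slice())
    }
}
```

The first request for a file pays the download cost; later requests are served from the local store, which matches the behavior described above.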

As the project grows, we anticipate the browser will store more models, which can take up significant space on disk. We plan to add an interface in Firefox to manage downloaded models so our users can list them and remove some if needed.

Fine-tuning a ViT + GPT-2 model

Ankur Kumar released a popular model on Hugging Face to generate alt text for images and blogged about it. This model was also published as ONNX weights by Joshua Lochner so it could be used in Transformers.js, see https://huggingface.co/Xenova/vit-gpt2-image-captioning

The model does a good job, even if in some cases we had better results with https://huggingface.co/microsoft/git-base-coco. However, the GIT architecture is not yet supported in ONNX converters, and with fewer than 200M parameters, most of the accuracy is obtained by focusing on good training data. So we have picked ViT for our first model.

Ankur used the google/vit-base-patch16-224-in21k image encoder and the GPT-2 text decoder and fine-tuned them using the COCO dataset, which is a dataset of over 120k labeled images.

In order to reduce the model size and speed it up a little bit, we’ve decided to replace GPT-2 with DistilGPT-2 — which is 2 times faster and 33% smaller according to its documentation.

Using that model in Transformers.js gave good results (see the training code at GitHub – mozilla/distilvit: image-to-text model for PDF.js).

We further improved the model for our use case with an updated training dataset and some supervised learning to simplify the output and mitigate some of the biases common in image to text models.

Alt text generation in PDF.js

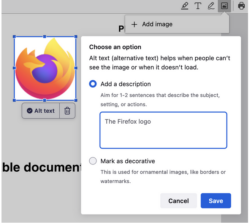

Firefox is able to add an image in a PDF using our popular open source pdf.js library:

Starting in Firefox 130, we will automatically generate an alt text and let the user validate it. So every time an image is added, we get an array of pixels that we pass to the ML engine, and a few seconds later we get a string corresponding to a description of this image (see the code).

The first time the user adds an image, they’ll have to wait for the model to download (which can take up to a few minutes depending on their connection), but subsequent uses will be much faster since the model will be stored locally.

In the future, we want to be able to provide an alt text for any existing image in PDFs, except images which just contain text (it’s usually the case for PDFs containing scanned books).

Next steps

Our alt text generator is far from perfect, but we want to take an iterative approach and improve it in the open. The inference engine has already landed in Firefox Nightly as a new ml component along with an initial documentation page.

We are currently working on improving the image-to-text datasets and model with what we’ve described in this blog post, which will be continuously updated on our Hugging Face page.

The code that produces the model lives on GitHub at https://github.com/mozilla/distilvit and the web application we’re building for our team to improve the model is located at https://github.com/mozilla/checkvite. We want to make sure the models and datasets we build, and all the code used, are made available to the community.

Once the alt text feature in PDF.js has matured and proven to work well, we hope to make the feature available in general browsing for users with screen readers.


The post Llamafile’s progress, four months in appeared first on Mozilla Hacks - the Web developer blog.

Through it all, lead developer and project visionary Justine Tunney has remained hard at work on a wide variety of fundamental improvements to the project. Just last night, Justine shipped the v0.8 release of llamafile, which includes not only support for the very latest open models, but also a number of big performance improvements for CPU inference.

As a result of Justine’s work, today llamafile is both the easiest and fastest way to run a wide range of open large language models on your own hardware. See for yourself: with llamafile, you can run Meta’s just-released LLaMA 3 model–which rivals the very best models available in its size class–on an everyday MacBook.

How did we do it? To explain that, let’s take a step back and tell you about everything that’s changed since v0.1.

tinyBLAS: democratizing GPU support for NVIDIA and AMD

llamafile is built atop the now-legendary llama.cpp project. llama.cpp supports GPU-accelerated inference for NVIDIA processors via the cuBLAS linear algebra library, but that requires users to install NVIDIA’s CUDA SDK. We felt uncomfortable with that fact, because it conflicts with our project goal of building a fully open-source and transparent AI stack that anyone can run on commodity hardware. And besides, getting CUDA set up correctly can be a bear on some systems. There had to be a better way.

With the community’s help (here’s looking at you, @ahgamut and @mrdomino!), we created our own solution: it’s called tinyBLAS, and it’s llamafile’s brand-new and highly efficient linear algebra library. tinyBLAS makes NVIDIA acceleration simple and seamless for llamafile users. On Windows, you don’t even need to install CUDA at all; all you need is the display driver you’ve probably already installed.

But tinyBLAS is about more than just NVIDIA: it supports AMD GPUs, as well. This is no small feat. While AMD commands a respectable 20% of today’s GPU market, poor software and driver support have historically made them a secondary player in the machine learning space. That’s a shame, given that AMD’s GPUs offer high performance, are price competitive, and are widely available.

One of llamafile’s goals is to democratize access to open source AI technology, and that means getting AMD a seat at the table. That’s exactly what we’ve done: with llamafile’s tinyBLAS, you can now easily make full use of your AMD GPU to accelerate local inference. And, as with CUDA, if you’re a Windows user you don’t even have to install AMD’s ROCm SDK.

All of this means that, for many users, llamafile will automatically use your GPU right out of the box, with little to no effort on your part.

CPU performance gains for faster local AI

Here at Mozilla, we are keenly interested in the promise of “local AI,” in which AI models and applications run directly on end-user hardware instead of in the cloud. Local AI is exciting because it opens up the possibility of more user control over these systems and greater privacy and security for users.

But many consumer devices lack the high-end GPUs that are often required for inference tasks. llama.cpp has been a game-changer in this regard because it makes local inference both possible and usably performant on CPUs instead of just GPUs.

Justine’s recent work on llamafile has now pushed the state of the art even further. As documented in her detailed blog post on the subject, by writing 84 new matrix multiplication kernels she was able to increase llamafile’s prompt evaluation performance by an astonishing 10x compared to our previous release. This is a substantial and impactful step forward in the quest to make local AI viable on consumer hardware.

This work is also a great example of our commitment to the open source AI community. After completing this work we immediately submitted a PR to upstream these performance improvements to llama.cpp. This was just the latest of a number of enhancements we’ve contributed back to llama.cpp, a practice we plan to continue.

Raspberry Pi performance gains

Speaking of consumer hardware, there are few examples that are both more interesting and more humble than the beloved Raspberry Pi. For a bargain basement price, you get a full-featured computer running Linux with plenty of computing power for typical desktop uses. It’s an impressive package, but historically it hasn’t been considered a viable platform for AI applications.

Not any more. llamafile has now been optimized for the latest model (the Raspberry Pi 5), and the result is that a number of small LLMs–such as Rocket-3B (download), TinyLLaMA-1.5B (download), and Phi-2 (download)–run at usable speeds on one of the least expensive computers available today. We’ve seen prompt evaluation speeds of up to 80 tokens/sec in some cases!

Keeping up with the latest models

The pace of progress in the open model space has been stunningly fast. Over the past few months, hundreds of models have been released or updated via fine-tuning. Along the way, there has been a clear trend of ever-increasing model performance and ever-smaller model sizes.

The llama.cpp project has been doing an excellent job of keeping up with all of these new models, frequently rolling out support for new architectures and model features within days of their release.

For our part we’ve been keeping llamafile closely synced with llama.cpp so that we can support all the same models. Given the complexity of both projects, this has been no small feat, so we’re lucky to have Justine on the case.

Today, you can use the very latest and most capable open models with llamafile thanks to her hard work. For example, we were able to roll out llamafiles for Meta’s newest LLaMA 3 models–8B-Instruct and 70B-Instruct–within a day of their release. With yesterday’s 0.8 release, llamafile can also run Grok, Mixtral 8x22B, and Command-R.

Creating your own llamafiles

Since the day that llamafile shipped, people have wanted to create their own llamafiles. Previously, this required a number of steps, but today you can do it with a single command, e.g.:

llamafile-convert [model.gguf]

In just moments, this will produce a “model.llamafile” file that is ready for immediate use. Our thanks to community member @chan1012 for contributing this helpful improvement.

In a related development, Hugging Face recently added official support for llamafile within their model hub. This means you can now search and filter Hugging Face specifically for llamafiles created and distributed by other people in the open source community.

OpenAI-compatible API server

Since it’s built on top of llama.cpp, llamafile inherits that project’s server component, which provides OpenAI-compatible API endpoints. This enables developers who are building on top of OpenAI to switch to using open models instead. At Mozilla we very much want to support this kind of future: one where open-source AI is a viable alternative to centralized, closed, commercial offerings.

While open models do not yet fully rival the capabilities of closed models, they’re making rapid progress. We believe that making it easier to pivot existing code over to executing against open models will increase demand and further fuel this progress.

Over the past few months, we’ve invested effort in extending these endpoints, both to increase functionality and improve compatibility. Today, llamafile can serve as a drop-in replacement for OpenAI in a wide variety of use cases.

We want to further extend our API server’s capabilities, and we’re eager to hear what developers want and need. What’s holding you back from using open models? What features, capabilities, or tools do you need? Let us know!

Integrations with other open source AI projects

Finally, it’s been a delight to see llamafile adopted by independent developers and integrated into leading open source AI projects (like Open Interpreter). Kudos in particular to our own Kate Silverstein who landed PRs that add llamafile support to LangChain and LlamaIndex (with AutoGPT coming soon).

If you’re a maintainer or contributor to an open source AI project that you feel would benefit from llamafile integration, let us know how we can help.

Join us!

The llamafile project is just getting started, and it’s also only the first step in a major new initiative on Mozilla’s part to contribute to and participate in the open source AI community. We’ll have more to share about that soon, but for now: I invite you to join us on the llamafile project!

The best place to connect with both the llamafile team at Mozilla and the overall llamafile community is over at our Discord server, which has a dedicated channel just for llamafile. And of course, your enhancement requests, issues, and PRs are always welcome over at our GitHub repo.

I hope you’ll join us. The next few months are going to be even more interesting and unexpected than the last, both for llamafile and for open source AI itself.


The post Porting a cross-platform GUI application to Rust appeared first on Mozilla Hacks - the Web developer blog.

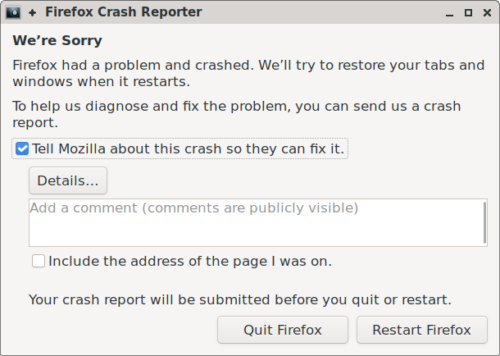

This post details the approach we have taken to rewrite the crash reporter in Rust. We discuss the reasoning behind this rewrite, what makes the crash reporter a unique application, the architecture we used, and some details of the implementation.

Why Rewrite?

Even though it is important to properly handle main process crashes, the crash reporter hasn’t received significant development in a while (aside from development to ensure that crash reports and telemetry continue to reliably be delivered)! It has long been stuck in a local maximum of “good enough” and “scary to maintain”: it features 3 individual GUI implementations (for Windows, GTK+ for Linux, and macOS), glue code abstracting a few things (mostly in C++, and Objective-C for macOS), a binary blob produced by obsoleted Apple development tools, and no test suite. Because of this, there is a backlog of features and improvements which haven’t been acted on.

We’ve recently had a number of successful pushes to decrease crash rates (including both big leaps and many small bug fixes), and the crash reporter has functioned well enough for our needs during this time. However, we’ve reached an inflection point where improving the crash reporter would provide valuable insight to enable us to decrease the crash rate even further. For the reasons previously mentioned, improving the current codebase is difficult and error-prone, so we deemed it appropriate to rewrite the application so we can more easily act on the feature backlog and improve crash reports.

Like many components of Firefox, we decided to use Rust for this rewrite to produce a more reliable and maintainable program. Besides the often-touted memory safety built into Rust, its type system and standard library make reasoning about code, handling errors, and developing cross-platform applications far more robust and comprehensive.

Crash Reporting is an Edge Case

There are a number of features of the crash reporter which make it quite unique, especially compared to other components which have been ported to Rust. For one thing, it is a standalone, individual program; basically no other components of Firefox are used in this way. Firefox itself launches many processes as a means of sandboxing and insulating against crashes; however, these processes all talk to one another and have access to the same code base.

The crash reporter has a very unique requirement: it must use as little as possible of the Firefox code base, ideally none! We don’t want it to rely on code which may be buggy and cause the reporter itself to crash. Using a completely independent implementation ensures that when a main process crash does occur, the cause of that crash won’t affect the reporter’s functionality as well.

The crash reporter also necessarily has a GUI. This alone may not separate it from other Firefox components, but we can’t leverage any of the cross-platform rendering goodness that Firefox provides! So we need to implement a cross-platform GUI independent of Firefox as well. You might think we could reach for an existing cross-platform GUI crate; however, we have a few reasons not to do so.

- We want to minimize the use of external code: to improve crash reporter reliability (which is paramount), we want it to be as simple and auditable as possible.

- Firefox vendors all dependencies in-tree, so we are hesitant to bring in large dependencies (GUI libraries are likely pretty sizable).

- There are only a few third-party crates that provide a native OS look and feel (or actually use native GUI APIs): it’s desirable for the crash reporter to have a native feel to be familiar to users and take advantage of accessibility features.

So all of this is to say that third-party cross-platform GUI libraries aren’t a favorable option.

These requirements significantly narrow the approach that can be used.

Building a GUI View Abstraction

In order to make the crash reporter more maintainable (and make it easier to add new features in the future), we want to have as little platform-specific code as possible, and we want that code to be generic. We can achieve this by using a simple UI model that can be converted into native GUI code for each platform. Each UI implementation will need to provide two methods (over arbitrary platform-specific &self data):

```rust
/// Run a UI loop, displaying all windows of the application until it terminates.
fn run_loop(&self, app: model::Application);

/// Invoke a function asynchronously on the UI loop thread.
fn invoke(&self, f: model::InvokeFn);
```

The run_loop function is pretty self-explanatory: the UI implementation takes an Application model (which we’ll discuss shortly) and runs the application, blocking until the application is complete. Conveniently, our target platforms generally have similar assumptions around threading: the UI runs in a single thread and typically runs an event loop which blocks on new events until an event signaling the end of the application is received.

There are some cases where we’ll need to run a function on the UI thread asynchronously (like displaying a window, updating a text field, etc). Since run_loop blocks, we need the invoke method to define how to do this. This threading model will make it easy to use the platform GUI frameworks: everything calling native functions will occur on a single thread (the main thread in fact) for the duration of the program.
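To make the two-method contract concrete, here is a minimal sketch of what such a trait and a toy headless implementation could look like. The trait name `Ui`, the `HeadlessUi` type, its `quit` method, and the fields of `Application` are assumptions made for illustration; only `run_loop`, `invoke`, `Application`, and `InvokeFn` come from the text above, and the real `InvokeFn` type may differ.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// Hypothetical stand-in for `model::Application`.
pub struct Application {
    pub window_titles: Vec<String>,
    pub rtl: bool,
}

/// Assumed shape of `model::InvokeFn`: a boxed function to run on the UI thread.
pub type InvokeFn = Box<dyn FnOnce() + Send + 'static>;

/// The two methods every UI implementation provides.
pub trait Ui {
    /// Run a UI loop, displaying all windows of the application until it terminates.
    fn run_loop(&self, app: Application);
    /// Invoke a function asynchronously on the UI loop thread.
    fn invoke(&self, f: InvokeFn);
}

enum Event {
    Invoke(InvokeFn),
    Quit,
}

/// Toy headless UI: the "event loop" is just a channel of events.
pub struct HeadlessUi {
    sender: Sender<Event>,
    receiver: Receiver<Event>,
}

impl HeadlessUi {
    pub fn new() -> Self {
        let (sender, receiver) = channel();
        HeadlessUi { sender, receiver }
    }

    /// Ask the loop to stop, like a native "application should terminate" event.
    pub fn quit(&self) {
        self.sender.send(Event::Quit).expect("receiver is owned by self");
    }
}

impl Ui for HeadlessUi {
    fn run_loop(&self, _app: Application) {
        // Block on events until one signals the end of the application.
        while let Ok(event) = self.receiver.recv() {
            match event {
                Event::Invoke(f) => f(),
                Event::Quit => break,
            }
        }
    }

    fn invoke(&self, f: InvokeFn) {
        self.sender.send(Event::Invoke(f)).expect("receiver is owned by self");
    }
}
```

A native implementation would replace the channel with the platform's own event queue, but the shape stays the same: `invoke` only enqueues, and the queued function runs later inside `run_loop`.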

This is a good time to be a bit more specific about exactly what each UI implementation will look like. We’ll discuss pain points for each later on. There are 4 UI implementations:

- A Windows implementation using the Win32 API.

- A macOS implementation using Cocoa (AppKit and Foundation frameworks).

- A Linux implementation using GTK+ 3 (the “+” has since been dropped in GTK 4, so henceforth I’ll refer to it as “GTK”). Linux doesn’t provide its own GUI primitives, and we already ship GTK with Firefox on Linux to make a modern-feeling GUI, so we can use it for the crash reporter, too. Note that some platforms that aren’t directly supported by Mozilla (like BSDs) use the GTK implementation as well.

- A testing implementation which will allow tests to hook into a virtual UI and poke things (to simulate interactions and read state).

One last detail before we dive in: the crash reporter (at least right now) has a pretty simple GUI. Because of this, an explicit non-goal of the development was to create a separate Rust GUI crate. We wanted to create just enough of an abstraction to cover the cases we needed in the crash reporter. If we need more controls in the future, we can add them to the abstraction, but we avoided spending extra cycles to fill out every GUI use case.

Likewise, we tried to avoid unnecessary development by allowing some tolerance for hacks and built-in edge cases. For example, our model defines a Button as an element which contains an arbitrary element, but actually supporting that with Win32 or AppKit would have required a lot of custom code, so we special case on a Button containing a Label (which is all we need right now, and an easy primitive available to us). I’m happy to say there aren’t really many special cases like that at all, but we are comfortable with the few that were needed.

The UI Model

Our model is a declarative structuring of concepts mostly present in GTK. Since GTK is a mature library with proven high-level UI concepts, this made it appropriate for our abstraction and made the GTK implementation pretty simple. For instance, the simplest way that GTK does layout (using container GUI elements and per-element margins/alignments) is good enough for our GUI, so we use similar definitions in the model. Notably, this “simple” layout definition is actually somewhat high-level and complicates the macOS and Windows implementations a bit (but this tradeoff is worth the ease of creating UI models).

The top-level type of our UI model is Application. This is pretty simple: we define an Application as a set of top-level Windows (though our application only has one) and whether the current locale is right-to-left. We inspect Firefox resources to use the same locale that Firefox would, so we don’t rely on the native GUI’s locale settings.

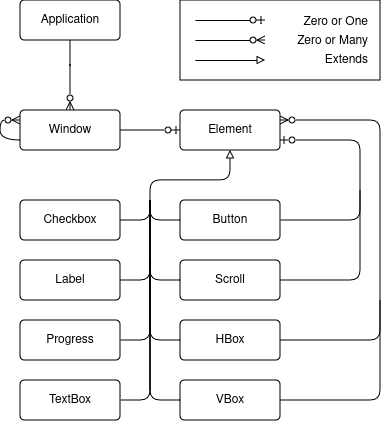

As you might expect, each Window contains a single root element. The rest of the model is made up of a handful of typical container and primitive GUI elements:

The crash reporter only needs 8 types of GUI elements! And really, Progress is used as a spinner rather than indicating any real progress as of right now, so it’s not strictly necessary (but nice to show).

Rust does not explicitly support the object-oriented concept of inheritance, so you might be wondering how each GUI element “extends” Element. The relationship represented in the picture is somewhat abstract; the implemented Element looks like:

    pub struct Element {
        pub style: ElementStyle,
        pub element_type: ElementType
    }

where ElementStyle contains all the common properties of elements (alignment, size, margin, visibility, and enabled state), and ElementType is an enum containing each of the specific GUI elements as variants.
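As a rough sketch of how this struct-plus-enum layout replaces inheritance, consider the reduced model below. Only `Element`, `ElementStyle`, `ElementType`, and the element names `Label`, `Button`, and `VBox` appear in this post; the individual field names, the `Alignment` variants other than `Fill` and `End`, and the helper `visible_label_texts` are assumptions for illustration, and most element types are omitted.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Default)]
pub enum Alignment {
    #[default]
    Start,
    Center,
    End,
    Fill,
}

/// Properties common to every element (a subset, with assumed field names).
#[derive(Debug, Clone, PartialEq)]
pub struct ElementStyle {
    pub horizontal_alignment: Alignment,
    pub vertical_alignment: Alignment,
    pub margin: u32,
    pub visible: bool,
    pub enabled: bool,
}

impl Default for ElementStyle {
    fn default() -> Self {
        ElementStyle {
            horizontal_alignment: Alignment::default(),
            vertical_alignment: Alignment::default(),
            margin: 0,
            visible: true,
            enabled: true,
        }
    }
}

#[derive(Debug, Clone, PartialEq)]
pub struct Label {
    pub text: String,
}

#[derive(Debug, Clone, PartialEq)]
pub struct Button {
    pub content: Option<Box<Element>>,
}

#[derive(Debug, Clone, PartialEq)]
pub struct VBox {
    pub items: Vec<Element>,
    pub spacing: u32,
}

/// Each specific GUI element is a variant instead of a subclass.
#[derive(Debug, Clone, PartialEq)]
pub enum ElementType {
    Label(Label),
    Button(Button),
    VBox(VBox),
}

#[derive(Debug, Clone, PartialEq)]
pub struct Element {
    pub style: ElementStyle,
    pub element_type: ElementType,
}

/// Collect the text of every visible label, depth first.
pub fn visible_label_texts(element: &Element) -> Vec<&str> {
    if !element.style.visible {
        return Vec::new();
    }
    match &element.element_type {
        ElementType::Label(label) => vec![label.text.as_str()],
        ElementType::Button(button) => button
            .content
            .as_deref()
            .map(visible_label_texts)
            .unwrap_or_default(),
        ElementType::VBox(vbox) => vbox.items.iter().flat_map(visible_label_texts).collect(),
    }
}
```

Code that cares about common properties reads `style`, and code that cares about a specific element matches on `element_type`, which is roughly what a UI implementation does when it walks the model.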

Building the Model

The model elements are all intended to be consumed by the UI implementations; as such, almost all of the fields have public visibility. However, as a means of having a separate interface for building elements, we define an ElementBuilder<T> type. This type has methods that maintain assertions and provide convenience setters. For instance, many methods accept parameters that are impl Into<MemberType>, some methods like margin() set multiple values (but you can be more specific with margin_top()), etc.

There is a general impl<T> ElementBuilder<T> which provides setters for the various ElementStyle properties, and then each specific element type can also provide their own impl ElementBuilder<SpecificElement> with additional properties unique to the element type.
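The split between a general `impl<T>` and element-specific impls can be sketched as follows. This is an illustration under assumptions rather than the crash reporter's actual code: the `Margin` fields, the builder's public fields, and the choice to return `&mut Self` for chaining are all made up here, while `margin()`, `margin_top()`, and the `impl Into<...>` parameter style come from the description above.

```rust
#[derive(Debug, Default, Clone, PartialEq)]
pub struct Margin {
    pub top: u32,
    pub bottom: u32,
    pub start: u32,
    pub end: u32,
}

#[derive(Debug, Default)]
pub struct ElementStyle {
    pub margin: Margin,
}

#[derive(Debug, Default)]
pub struct Label {
    pub text: String,
}

/// Builder wrapping the common style plus one specific element.
#[derive(Debug, Default)]
pub struct ElementBuilder<T> {
    pub style: ElementStyle,
    pub element: T,
}

/// Setters shared by every element type.
impl<T> ElementBuilder<T> {
    /// Convenience setter: one call sets all four margins.
    pub fn margin(&mut self, amount: u32) -> &mut Self {
        self.style.margin = Margin { top: amount, bottom: amount, start: amount, end: amount };
        self
    }

    /// More specific setter for a single side.
    pub fn margin_top(&mut self, amount: u32) -> &mut Self {
        self.style.margin.top = amount;
        self
    }
}

/// Setters that only make sense for labels.
impl ElementBuilder<Label> {
    pub fn text(&mut self, text: impl Into<String>) -> &mut Self {
        self.element.text = text.into();
        self
    }
}
```

With this shape, `ElementBuilder::<Label>::default().margin(10).text("Ok")` type-checks, while calling `text` on a builder for some other element type is a compile error.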

We combine ElementBuilder<T> with the final piece of the puzzle: a ui! macro. This macro allows us to write our UI in a declarative manner. For example, it allows us to write:

    let details_window = ui! {
        Window title("Crash Details") visible(show_details) modal(true) hsize(600) vsize(400)
            halign(Alignment::Fill) valign(Alignment::Fill)
        {
            VBox margin(10) spacing(10) halign(Alignment::Fill) valign(Alignment::Fill) {
                Scroll halign(Alignment::Fill) valign(Alignment::Fill) {
                    TextBox content(details) halign(Alignment::Fill) valign(Alignment::Fill)
                },
                Button halign(Alignment::End) on_click(move || *show_details.borrow_mut() = false)
                {
                    Label text("Ok")
                }
            }
        }
    };

The implementation of ui! is fairly simple. The first identifier provides the element type and an ElementBuilder<T> is created. After that, the remaining method-call-like syntax forms are called on the builder (which is mutable).

Optionally, a final set of curly braces indicates that the element has children. In that case, the macro is recursively called to create them, and add_child is called on the builder with the result (so we just need to make sure a builder has an add_child method). Ultimately the syntax transformation is pretty simple, but I believe that this macro is a little bit more than just syntax sugar: it makes reading and editing the UI a fair bit clearer, since the hierarchy of elements is represented in the syntax. Unfortunately, a downside is that there’s no way to support automatic formatting of such macro DSLs, so developers will need to keep the formatting sane by hand.
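For readers curious how such a transformation can be written, below is a heavily simplified `macro_rules!` sketch of the same idea. It is not the crash reporter's macro: the `Node` type, the `Kind` marker trait, the `build` method, and the reduced set of elements and setters are assumptions chosen to keep the example self-contained. It does follow the steps described above: the first identifier picks the builder type, the method-call-like forms are invoked on the builder, and an optional brace block recursively builds children and passes them to `add_child`.

```rust
use std::marker::PhantomData;

/// Simplified stand-in for a built model element.
#[derive(Debug, Default, PartialEq)]
pub struct Node {
    pub kind: &'static str,
    pub properties: Vec<(&'static str, String)>,
    pub children: Vec<Node>,
}

/// Marker types naming each element kind (hypothetical).
pub trait Kind {
    const NAME: &'static str;
}
pub struct VBox;
pub struct Label;
impl Kind for VBox {
    const NAME: &'static str = "VBox";
}
impl Kind for Label {
    const NAME: &'static str = "Label";
}

pub struct ElementBuilder<T> {
    node: Node,
    kind: PhantomData<T>,
}

impl<T: Kind> ElementBuilder<T> {
    pub fn new() -> Self {
        let node = Node { kind: T::NAME, ..Node::default() };
        ElementBuilder { node, kind: PhantomData }
    }
    pub fn margin(&mut self, pixels: u32) {
        self.node.properties.push(("margin", pixels.to_string()));
    }
    pub fn build(self) -> Node {
        self.node
    }
}

impl ElementBuilder<Label> {
    pub fn text(&mut self, text: &str) {
        self.node.properties.push(("text", text.to_string()));
    }
}

impl ElementBuilder<VBox> {
    pub fn add_child(&mut self, child: Node) {
        self.node.children.push(child);
    }
}

macro_rules! ui {
    // Internal rule: build each comma-separated child and attach it.
    (@children $builder:ident;
        $( $ty:ident $( $method:ident ( $($arg:expr),* ) )* $( { $($grandchildren:tt)* } )? ),*
    ) => {
        $( $builder.add_child(ui!($ty $( $method($($arg),*) )* $( { $($grandchildren)* } )?)); )*
    };
    // Entry rule: element type, method-call-like forms, optional children.
    ($ty:ident $( $method:ident ( $($arg:expr),* ) )* $( { $($children:tt)* } )?) => {{
        #[allow(unused_mut)]
        let mut builder = ElementBuilder::<$ty>::new();
        $( builder.$method($($arg),*); )*
        $( ui!(@children builder; $($children)*); )?
        builder.build()
    }};
}
```

Because `add_child` only exists on `ElementBuilder<VBox>` in this sketch, giving a `Label` a brace block fails to compile, which matches the note above that a builder just needs an `add_child` method to accept children.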

So now we have a model defined and a declarative way of building it. But we haven’t discussed any dynamic runtime behaviors here. In the above example, we see an on_click handler being set on a Button. We also see things like the Window’s visible property being set to a show_details value, which is changed when the button is clicked and the on_click handler runs. We hook into this declarative UI to change or react to events at runtime using a set of simple data binding primitives with which UI implementations can interact.

Many GUI frameworks nowadays (both for Rust and other languages) have been built with the “diffing element trees” architecture (think React), where your code is (at least mostly) functional and side-effect-free and produces the GUI view as a function of the current state. This approach has its tradeoffs: for instance, it makes complicated, stateful alterations of the layout very simple to write, understand, and maintain, and encourages a clean separation of model and view! However, since we aren’t writing a framework, and our application is and will remain fairly simple, the benefits of such an architecture were not worth the additional development burden. Our implementation is more similar to the MVVM architecture:

- the model is, well, the model discussed here;

- the views are the various UI implementations; and

- the viewmodel is (loosely, if you squint) the collection of data bindings.

Data Binding

There are a few types which we use to declare dynamic (runtime-changeable) values. In our UI, we needed to support a few different behaviors:

- triggering events, i.e., what happens when a button is clicked,

- synchronized values which will mirror and notify of changes to all clones, and

- on-demand values which can be queried for the current value.

On-demand values are used to get textbox contents rather than using a synchronized value, in an effort to avoid implementing debouncing in each UI. It may not be terribly difficult to do so, but it also wasn’t difficult to support the on-demand implementation.

As a means of convenience, we created a Property type which encompasses the value-oriented fields as well. A Property<T> can be set to either a static value (T), a synchronized value (Synchronized<T>), or an on-demand value (OnDemand<T>). It supports an impl From for each of these, so that builder methods can look like fn my_method(&mut self, value: impl Into<Property<T>>) allowing any supported value to be passed in a UI declaration.

We won’t discuss the implementation in depth (it’s what you’d expect), but it’s worth noting that these are all Clone to easily share the data bindings: they use Rc (we don’t need thread safety) and RefCell as necessary to access callbacks.

In the example from the last section, show_details is a Synchronized<bool> value. When it changes, the UI implementations change the associated window visibility. The Button on_click callback sets the synchronized value to false, hiding the window (note that the details window used in this example is never closed, it is just shown and hidden).
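A minimal sketch of these binding types, assuming nothing beyond what is described above, might look like the following. The type names `Synchronized`, `OnDemand`, and `Property` are from the post; the method names (`on_change`, `set`, `get`, `register`, `read`), the variant names, and the `visible` helper are assumptions for illustration.

```rust
use std::cell::RefCell;
use std::rc::Rc;

struct Shared<T> {
    value: RefCell<T>,
    callbacks: RefCell<Vec<Box<dyn Fn(&T)>>>,
}

/// A value whose clones all observe (and are notified of) changes.
pub struct Synchronized<T>(Rc<Shared<T>>);

impl<T> Clone for Synchronized<T> {
    fn clone(&self) -> Self {
        Synchronized(Rc::clone(&self.0))
    }
}

impl<T> Synchronized<T> {
    pub fn new(value: T) -> Self {
        let shared = Shared { value: RefCell::new(value), callbacks: RefCell::new(Vec::new()) };
        Synchronized(Rc::new(shared))
    }

    /// Register a callback; a UI implementation would do this.
    pub fn on_change(&self, callback: impl Fn(&T) + 'static) {
        self.0.callbacks.borrow_mut().push(Box::new(callback));
    }

    /// Store a new value and notify every registered callback.
    /// Note: a callback that calls `set` again would panic in this sketch.
    pub fn set(&self, value: T) {
        *self.0.value.borrow_mut() = value;
        let current = self.0.value.borrow();
        for callback in self.0.callbacks.borrow().iter() {
            callback(&*current);
        }
    }

    pub fn get(&self) -> T
    where
        T: Clone,
    {
        self.0.value.borrow().clone()
    }
}

/// A value the UI can be asked for (e.g. current text box contents).
pub struct OnDemand<T>(Rc<RefCell<Option<Box<dyn Fn() -> T>>>>);

impl<T> OnDemand<T> {
    pub fn new() -> Self {
        OnDemand(Rc::new(RefCell::new(None)))
    }
    pub fn register(&self, getter: impl Fn() -> T + 'static) {
        *self.0.borrow_mut() = Some(Box::new(getter));
    }
    pub fn read(&self) -> Option<T> {
        self.0.borrow().as_ref().map(|getter| getter())
    }
}

/// Either a fixed value or one of the dynamic bindings.
pub enum Property<T> {
    Static(T),
    Binding(Synchronized<T>),
    ReadOnly(OnDemand<T>),
}

impl<T> From<T> for Property<T> {
    fn from(value: T) -> Self {
        Property::Static(value)
    }
}
impl<T> From<Synchronized<T>> for Property<T> {
    fn from(value: Synchronized<T>) -> Self {
        Property::Binding(value)
    }
}
impl<T> From<OnDemand<T>> for Property<T> {
    fn from(value: OnDemand<T>) -> Self {
        Property::ReadOnly(value)
    }
}

/// Example of a builder-style setter accepting any supported value.
pub fn visible(value: impl Into<Property<bool>>) -> Property<bool> {
    value.into()
}
```

In terms of the details window example, the UI implementation would call something like `on_change` on `show_details` to toggle the native window, and the button handler would call `set(false)` on its own clone.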

In a former iteration, data binding types had a lifetime parameter which specified the lifetime for which event callbacks were valid. While we were able to make this work, it greatly complicated the code, especially because there’s no way to communicate the correct covariance of the lifetime to the compiler, so there was additional unsafe code transmuting lifetimes (though it was contained as an implementation detail). These lifetimes were also infectious, requiring some of the complicated semantics regarding their safety to be propagated into the model types which stored Property fields.

Much of this was to avoid cloning values into the callbacks, but changing these types to all be Clone and store static-lifetime callbacks was worth making the code far more maintainable.

Threading and Thread Safety

The careful reader might remember that we discussed how our threading model involves interacting with the UI implementations only on the main thread. This includes updating the data bindings, since the UI implementations might have registered callbacks on them! While we could run everything in the main thread, it’s generally a much better experience to do as much off of the UI thread as possible, even if we don’t do much that’s blocking (though we will be blocking when we send crash reports). We want our business logic to default to being off of the main thread so that the UI doesn’t ever freeze. We can guarantee this with some careful design.

The simplest way to guarantee this behavior is to put all of the business logic in one (non-Clone, non-Sync) type (let’s call it Logic) and construct the UI and UI state (like Property values) in another type (let’s call it UI). We can then move the Logic value into a separate thread to guarantee that UI can’t interact with Logic directly, and vice versa. Of course we do need to communicate sometimes! But we want to ensure that this communication will always be delegated to the thread which owns the values (rather than the values directly interacting with each other).

We can accomplish this by creating an enqueuing function for each type and storing that in the opposite type. Such a function will be passed boxed functions to run on the owning thread that get a reference to the owned type (e.g., Box<dyn FnOnce(&T) + Send + 'static>). This is simple to create: for the UI thread, it is just the UI implementation’s invoke method which we briefly discussed previously. The Logic thread does nothing but run a loop which will get these functions and run them on the owned value (we just enqueue and pass them using an mpsc::channel). Now each type can asynchronously call methods on the other with the guarantee that they’ll be run on the correct thread.
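The Logic side of this scheme can be sketched with a channel of boxed functions. The names `Task`, `spawn_owner`, and the `reports_sent` field are hypothetical; the boxed-function type and the use of `mpsc::channel` follow the description above.

```rust
use std::cell::Cell;
use std::sync::mpsc::{channel, Sender};
use std::thread::{self, JoinHandle};

/// A function to run on the thread that owns a `T`.
pub type Task<T> = Box<dyn FnOnce(&T) + Send + 'static>;

/// Move `value` to its own thread and return an enqueuing handle for it.
/// The thread does nothing but receive functions and run them on the value.
pub fn spawn_owner<T: Send + 'static>(value: T) -> (Sender<Task<T>>, JoinHandle<T>) {
    let (sender, receiver) = channel::<Task<T>>();
    let handle = thread::spawn(move || {
        // The loop ends once every sender has been dropped.
        for task in receiver {
            task(&value);
        }
        value
    });
    (sender, handle)
}

/// Stand-in for the business logic type; `Cell` makes it non-`Sync`,
/// so it can be moved to another thread but never shared between threads.
pub struct Logic {
    pub reports_sent: Cell<u32>,
}
```

The UI type would store the returned `Sender` (wrapped in a small enqueuing function), and the Logic type would store the UI's `invoke`, so each side can only reach the other by asking the owning thread to run something.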

In a former iteration, a more complicated scheme was used with thread-local storage and a central type which was responsible for both creating threads and delegating the functions. But with such a basic use case as two threads delegating between each other, we were able to distill this to the essential aspects needed, greatly simplifying the code.

Localization

One nice benefit of this rewrite is that we could bring the localization of the crash reporter up to speed with our modern tooling. In almost every other part of Firefox, we use fluent to handle localization. Using fluent in the crash reporter makes the experience of localizers more uniform and predictable; they do not need to understand more than one localization system (the crash reporter was one of the last holdouts of the old system). It was very easy to use in the new code, with just a bit of extra code to extract the localization files from the Firefox installation when the crash reporter is run. In the worst case scenario where we can’t find or access these files, we have the en-US definitions directly bundled in the crash reporter binary.

The UI Implementations

We won’t go into much detail about the implementations, but it’s worth talking about each a bit.

Linux (GTK)

The GTK implementation is probably the most straightforward and succinct. We use bindgen to generate Rust bindings to the GTK functions we need (avoiding vendoring any external crates). Then we simply call all of the corresponding GTK functions to set up the GTK widgets as described in the model (remember, the model was made to mirror some of the GTK concepts).

Since GTK is somewhat modern and meant to be written by humans (not automated tools like some of the other platforms), there weren’t really any pain points or unusual behaviors that needed to be addressed.

We have a handful of nice features to improve memory safety and correctness. A set of traits makes it easy to attach owned data to GObjects (ensuring data remains valid and is properly dropped when the GObject is destroyed), and a few macros set up the glue code between GTK signals and our data binding types.

Windows (Win32)

The Windows implementation may have been the most difficult to write, since Win32 GUIs are very rarely written nowadays and the API shows its age. We use the windows-sys crate to access bindings to the API (which was already vendored in the codebase for many other Windows API uses). This crate is generated directly from Windows function metadata (by Microsoft), but otherwise its bindings aren’t terribly different from what bindgen might have produced (though they are likely a bit more accurate).

There were a number of hurdles to overcome. For one thing, the Win32 API doesn’t provide any layout primitives, so the high-level layout concepts we use (which allow graceful resize/repositioning) had to be implemented manually. There are also quite a few extra API calls just to get to a GUI that looks somewhat decent (correct window colors, font smoothing, high DPI handling, etc). Even the default font ends up being a terrible-looking bitmapped font rather than the more modern system default; we needed to manually retrieve the system default and set it as the font to use, which was a bit surprising!

We have a set of traits to facilitate creating custom window classes and managing associated window data of class instances. We also have wrapper types to properly manage the lifetimes of handles and perform type conversions (mainly String to null-terminated wide strings and back) as an extra layer of safety around the API.
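As an illustration of the string conversions mentioned here, a portable sketch could look like this. The function names `to_wide` and `from_wide` are made up, and the real wrappers may well use Windows-specific standard library APIs instead of plain UTF-16 conversion.

```rust
/// Encode as UTF-16 with a trailing NUL, the shape Win32 wide-string functions expect.
pub fn to_wide(text: &str) -> Vec<u16> {
    text.encode_utf16().chain(std::iter::once(0)).collect()
}

/// Decode a wide string, stopping at the first NUL if one is present.
pub fn from_wide(wide: &[u16]) -> String {
    let end = wide.iter().position(|&unit| unit == 0).unwrap_or(wide.len());
    String::from_utf16_lossy(&wide[..end])
}
```

Keeping these conversions in one place means the rest of the code never handles raw wide-string buffers directly.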

macOS (Cocoa/AppKit)

The macOS implementation had its tricky parts, as macOS GUIs are overwhelmingly written with Xcode and there are a lot of automated and generated portions (such as nibs). We again use bindgen to generate Rust bindings, this time for the Objective-C APIs in macOS framework headers.

Unlike Windows and GTK, you don’t get keyboard shortcuts like Cmd-C, Cmd-Q, etc, for free if creating a GUI without e.g. Xcode (which generates it for you as part of a new project template). To have these typical shortcuts that users expect, we needed to manually implement the application main menu (which is what governs keyboard shortcuts). We also had to handle runtime setup like creating Objective-C autorelease pools, bringing the window and application (which are separate concepts) to the foreground, etc. Even implementing invoke to call a function on the main thread had its nuances, since modal windows use a nested event loop which would not call queued functions under the default NSRunLoop mode.

We wrote some simple helper types and a macro to make it easy to implement, register, and create Objective-C classes from Rust code. We used this for creating delegate classes as well as subclassing some controls for the implementation (like NSButton); it made it easy to safely manage the memory of Rust values underlying the classes and correctly register class method selectors.

The Test UI

We’ll discuss testing in the next section. Our testing UI is very simple. It doesn’t create a GUI, but allows us to interact directly with the model. The ui! macro supports an extra piece of syntax when tests are enabled to optionally set a string identifier for each element. We use these strings in unit tests to access and interact with the UI. The data binding types also support a few additional methods in tests to easily manipulate values. This UI allows us to simulate button presses, field entry, etc, to ensure that other UI state changes as expected as well as simulating the system side effects.

Mocking and Testing

An important goal of our rewrite was to add tests to the crash reporter; our old code was sorely lacking them (in part because unit testing GUIs is notoriously difficult).

Mocking Everything

In the new code, we can mock the crash reporter regardless of whether we are running tests or not (though it is always mocked for tests). This is important because mocking allows us to (manually) run the GUI in various states to check that the GUI implementations are correct and render well. Our mocking not only mocks the inputs to the crash reporter (environment variables, command line parameters, etc), it also mocks all side-effectful std functions.

We accomplish this by having a std module in the crate, and using crate::std throughout the rest of the code. When mocking is disabled, crate::std is simply the same as ::std. But when it is enabled, a bunch of functions that we have written are used instead. These mock the filesystem, environment, launching external commands, and other side effects. Importantly, only the minimal amount to mock the existing functions is implemented, so that if e.g. some new functions from std::fs, std::net, etc. are used, the crate will fail to compile with mocking enabled (so that we don’t miss any side effects). This might sound like a lot of effort, but you might be surprised at how little of std really needed to be mocked, and most implementations were pretty straightforward.
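The module-swapping idea can be sketched roughly as below. To keep the example from shadowing the real `std`, the stand-in module is called `sys` here, and the `mock` feature name, the fixed mock path, and the `install_dir` function are likewise assumptions for illustration.

```rust
/// Stand-in for the article's `crate::std` (named `sys` in this sketch).
pub mod sys {
    /// Normal builds: simply re-export the real module.
    #[cfg(not(feature = "mock"))]
    pub use ::std::env;

    /// Mocked builds: only the functions the application uses are reimplemented.
    #[cfg(feature = "mock")]
    pub mod env {
        use ::std::{io, path::PathBuf};

        pub fn current_exe() -> io::Result<PathBuf> {
            Ok(PathBuf::from("work_dir/crashreporter"))
        }
        // Anything else from `std::env` is deliberately absent, so new uses
        // fail to compile with mocking enabled until a mock is written.
    }
}

/// Application code names only `sys`, never `::std`, for side effects.
pub fn install_dir() -> Option<::std::path::PathBuf> {
    let exe = sys::env::current_exe().ok()?;
    exe.parent().map(|dir| dir.to_path_buf())
}
```

The compile failure on unmocked functions is the useful part of the design: it turns "did we forget to mock a side effect?" into a build error rather than a surprise during a test run.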

Now that we have our code using different mocked functions, we need to have a way of injecting the desired mock data (both in tests and in our normal mocked operation). For example, we have the ability to return some data when a File is read, but we need to be able to set that data differently for tests. Without going into too much detail, we accomplish this using a thread-local store of mock data. This way, we don’t need to change any code to accommodate the mock data; we only need to make changes where we set and retrieve it. The programming language enthusiasts out there may recognize this as a form of dynamic scoping. The implementation allows our mock data to be set with code like

    mock::builder()
        .set(
            crate::std::env::MockCurrentExe,
            "work_dir/crashreporter".into(),
        )
        .run(|| crash_reporter_main())

in tests, and

    pub fn current_exe() -> std::io::Result<std::path::PathBuf> {
        Ok(MockCurrentExe.get(|r| r.clone()))
    }

in our crate::std::env implementation.
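To show how a thread-local store can support this kind of API, here is a small sketch. It is an assumption-laden illustration, not the crash reporter's implementation: the `MockKey` trait, the `TypeId`-keyed map, and the by-value `Builder` are invented here, while `builder()`, `set`, `run`, `get`, and `MockCurrentExe` mirror the usage shown above.

```rust
use std::any::{Any, TypeId};
use std::cell::RefCell;
use std::collections::HashMap;
use std::path::PathBuf;

thread_local! {
    /// Mock data visible to everything called (on this thread) inside `run`.
    static MOCK_DATA: RefCell<HashMap<TypeId, Box<dyn Any>>> = RefCell::new(HashMap::new());
}

/// A unit type naming one piece of mock data and the type of its value.
pub trait MockKey: Sized + 'static {
    type Value: 'static;

    /// Read the value currently in scope for this key.
    fn get<R>(&self, f: impl FnOnce(&Self::Value) -> R) -> R {
        MOCK_DATA.with(|data| {
            let data = data.borrow();
            let value = data
                .get(&TypeId::of::<Self>())
                .and_then(|boxed| boxed.downcast_ref::<Self::Value>())
                .expect("mock data was not set for this key");
            f(value)
        })
    }
}

#[derive(Default)]
pub struct Builder {
    data: HashMap<TypeId, Box<dyn Any>>,
}

pub fn builder() -> Builder {
    Builder::default()
}

impl Builder {
    pub fn set<K: MockKey>(mut self, _key: K, value: K::Value) -> Self {
        self.data.insert(TypeId::of::<K>(), Box::new(value));
        self
    }

    /// Install the data, run `f`, then restore whatever was there before.
    /// (This sketch does not restore the previous data if `f` panics.)
    pub fn run<R>(self, f: impl FnOnce() -> R) -> R {
        let previous = MOCK_DATA.with(|data| data.replace(self.data));
        let result = f();
        MOCK_DATA.with(|data| data.replace(previous));
        result
    }
}

pub struct MockCurrentExe;

impl MockKey for MockCurrentExe {
    type Value = PathBuf;
}

pub fn current_exe() -> std::io::Result<PathBuf> {
    Ok(MockCurrentExe.get(|r| r.clone()))
}
```

The dynamic scoping shows up in `run`: the data is in effect only for the duration of the closure, and none of the functions in between need an extra parameter to receive it.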

Testing

With our mocking setup and test UI, we are able to extensively test the behavior of the crash reporter. The “last mile” of this testing which we can’t automate easily is whether each UI implementation faithfully represents the UI model. We manually test this with a mocked GUI for each platform.

Besides that, we are able to automatically test how arbitrary UI interactions cause the crash reporter to affect its own UI state and the environment (checking which programs are invoked and network connections are made, what happens if they fail, succeed, or time out, etc). We also set up a mock filesystem and add assertions in various scenarios over the precise resulting filesystem state once the crash reporter completes. This greatly increases our confidence in the current behaviors and ensures that future changes will not alter them, which is of the utmost importance for such an essential component of our crash reporting pipeline.

The End Product

Of course we can’t get away with writing all of this without a picture of the crash reporter! This is what it looks like on Linux using GTK. The other GUI implementations look the same but styled with a native look and feel.

Note that, for now, we wanted to keep it looking exactly the same as it previously did. So if you are unfortunate enough to see it, it shouldn’t appear as if anything has changed!

With a new, cleaned up crash reporter, we can finally unblock a number of feature requests and bug reports, such as:

- detecting whether an installation is corrupt and telling the user to re-install Firefox,

- checking whether there is faulty memory hardware on the crashing system, and

- using the Firefox network stack for the first attempt at submitting crashes (which respects user network settings like proxies).

We are excited to iterate and improve further on crash reporter functionality. But ultimately it’d be wonderful if you never see or use it, and we are constantly working toward that goal!

The post Porting a cross-platform GUI application to Rust appeared first on Mozilla Hacks - the Web developer blog.

The post Prototype even faster with the Gradio UI for Figma component library appeared first on Mozilla Hacks - the Web developer blog.

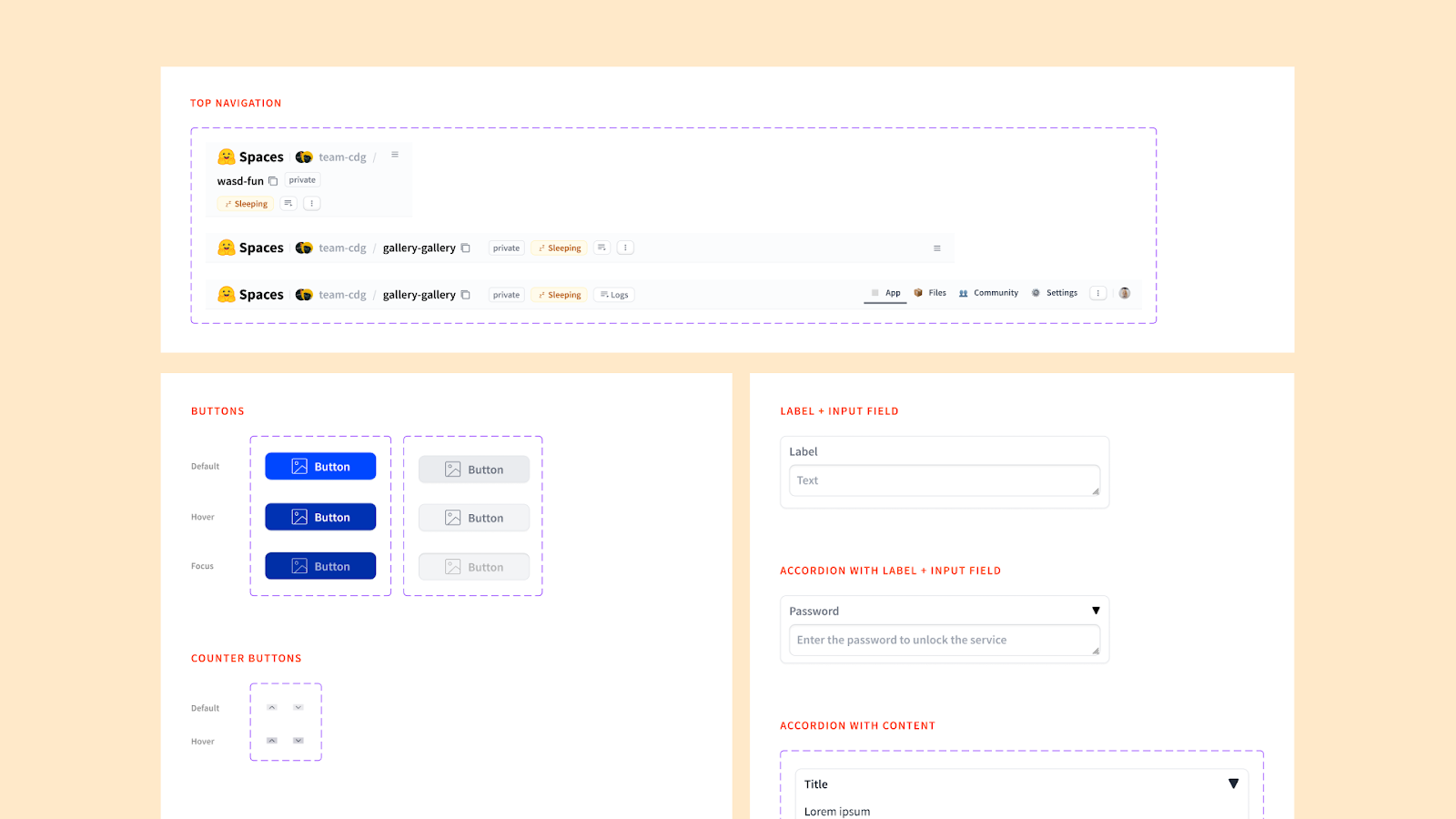

Although Gradio has made the development phase of prototyping easier, the design phase has been largely the same. Even with Gradio, designers have had to create components in Figma, outline expected user flows and behaviors, and hand off designs for developers in the same way they have always done. While working on a recent exploration, we realized something was needed: a set of Figma components based on Gradio that enabled designers to create wireframes quickly.

Today, we are releasing our library of design components for Gradio for others to use. The components are based on version 4.23.0 of Gradio and will be available through our Figma profile: Mozilla Innovation Projects, https://www.figma.com/@futureatmozilla. We hope these components help teams accelerate their discovery and experimentation with ML and generative AI.

You can find out more about Gradio at https://www.gradio.app/ and more about innovation at Mozilla at https://future.mozilla.org

Thanks to Amy Chiu and Anais Ron who created the components and to the Gradio team for their work. Happy designing!

What’s Inside Gradio UI for Figma?

Because Gradio is an ever-changing prototyping kit, current components are based on version 4.23.0 of Gradio. We selected components based on their wide array of potential uses. Here is a list of the components inside the kit:

- Typography (e.g. headers, body fonts)

- Iconography (e.g. chevrons, arrows, corner expanders)

Small Components:

- Buttons

- Checkbox

- Radio

- Sliders

- Tabs

- Accordion

- Delete Button

- Error Message

- Media Type Labels

- Media Player Controller

Big Components:

- Label + Textbox

- Accordion with Label + Input

- Video Player

- Label + Counter

- Label + Slider

- Accordion + Label

- Checkbox with Label

- Radio with Label

- Accordion with Content

- Accordion with Label + Input

- Top navigation

How to Access and Use Gradio UI for Figma

To start using the library, follow these simple steps:

- Access the Library: Access the component library directly by visiting our public Figma profile (https://www.figma.com/@futureatmozilla) or by searching for “Gradio UI for Figma” within the Figma Community section of your web or desktop Figma application.

- Explore the Documentation: Familiarize yourself with the components and guidelines to make the most out of your design process.

- Connect with Us: Connect with us by following our Figma profile or emailing us at [email protected]

The post Improving Performance in Firefox and Across the Web with Speedometer 3 appeared first on Mozilla Hacks - the Web developer blog.

This fulfills the vision set out in December 2022 to bring experts across the industry together in order to rethink how we measure browser performance, guided by a shared goal to reflect the real-world Web as much as possible. This is the first time the Speedometer benchmark, or any major browser benchmark, has been developed through a cross-industry collaboration supported by each major browser engine: Blink, Gecko, and WebKit. Working together means we can build a shared understanding of what matters to optimize, and facilitates broad review of the benchmark itself: both of which make it a stronger lever for improving the Web as a whole.

And we’re seeing results: Firefox got faster for real users in 2023 as a direct result of optimizing for Speedometer 3. This took a coordinated effort from many teams: understanding real-world websites, building new tools to drive optimizations, and making a huge number of improvements inside Gecko to make web pages run more smoothly for Firefox users. In the process, we’ve shipped hundreds of bug fixes across JS, DOM, Layout, CSS, Graphics, frontend, memory allocation, profile-guided optimization, and more.

We’re happy to see core optimizations in all the major browser engines turning into improved responsiveness for real users, and are looking forward to continuing to work together to build performance tests that improve the Web.

The post Announcing Interop 2024 appeared first on Mozilla Hacks - the Web developer blog.

The web platform is built on interoperability based on common standards. This offers users a degree of choice and control that sets the web apart from proprietary platforms defined by a single implementation. A commitment to ensuring that the web remains open and interoperable forms a fundamental part of Mozilla’s manifesto and web vision, and is why we’re so committed to shipping Firefox with our own Gecko engine.

However, interoperability requires care and attention to maintain. When implementations ship with differences from the standard and from each other, this creates a pain point for web authors; they have to choose between avoiding the problematic feature entirely and coding to specific implementation quirks. Over time, if enough authors produce implementation-specific content, then interoperability is lost, and along with it user agency.

This is the problem that the Interop Project is designed to address. By bringing browser vendors together to focus on interoperability, the project allows identifying areas where interoperability issues are causing problems, or may do in the near future. Tracking progress on those issues with a public metric provides accountability to the broader web community on addressing the problems.

The project works by identifying a set of high-priority focus areas: parts of the web platform where everyone agrees that making interoperability improvements will be of high value. These can be existing features where we know browsers have slightly different behaviors that are causing problems for authors, or they can be new features which web developer feedback shows are in high demand and which we want to launch across multiple implementations with high interoperability from the start. For each focus area a set of web-platform-tests is selected to cover that area, and the score is computed from the pass rate of these tests.

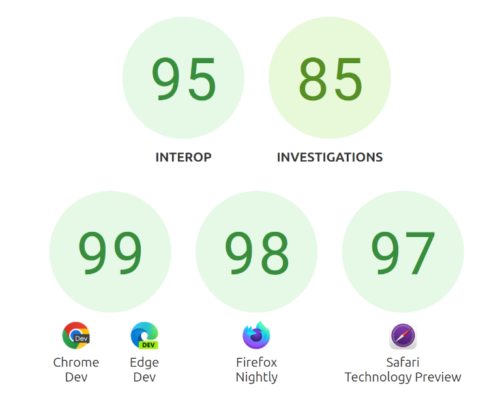

Interop 2023

The Interop 2023 project covered high profile features like the new :has() selector, and web-codecs, as well as areas of historically poor interoperability such as pointer events.

The results of the project speak for themselves: every browser ended the year with scores in excess of 97% for the prerelease versions of their browsers. Moreover, the overall Interoperability score — that is the fraction of focus area tests that pass in all participating browser engines — increased from 59% at the start of the year to 95% now. This result represents a huge improvement in the consistency and reliability of the web platform. For users this will result in a more seamless experience, with sites behaving reliably in whichever browser they prefer.
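The difference between a per-browser score and the overall Interoperability score can be illustrated with a small calculation. This is a simplified sketch of the idea only: the data shape and function names are hypothetical, and the real dashboard's computation is more detailed than a flat pass/fail per test.

```rust
/// One test's outcome in each participating engine (hypothetical data shape).
pub struct TestResult {
    pub name: &'static str,
    /// One entry per engine, in the same engine order for every test.
    pub passes: Vec<bool>,
}

/// Pass rate of a single engine: passing tests divided by all tests.
pub fn engine_score(results: &[TestResult], engine: usize) -> f64 {
    if results.is_empty() {
        return 0.0;
    }
    let passed = results.iter().filter(|result| result.passes[engine]).count();
    passed as f64 / results.len() as f64
}

/// Interop score: fraction of tests that pass in every engine.
pub fn interop_score(results: &[TestResult]) -> f64 {
    if results.is_empty() {
        return 0.0;
    }
    let passed_everywhere = results
        .iter()
        .filter(|result| result.passes.iter().all(|&passed| passed))
        .count();
    passed_everywhere as f64 / results.len() as f64
}
```

This is why the Interoperability score can sit well below every individual browser's score: each engine may fail a different small set of tests, and a test only counts toward the shared score when it passes everywhere.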

For the :has() selector — which we know from author feedback has been one of the most in-demand CSS features for a long time — every implementation is now passing 100% of the web-platform-tests selected for the focus area. Launching a major new platform feature with this level of interoperability demonstrates the power of the Interop project to progress the platform without compromising on implementation diversity, developer experience, or user choice.

As well as focus areas, the Interop project also has “investigations”. These are areas where we know that we need to improve interoperability, but aren’t at the stage of having specific tests which can be used to measure that improvement. In 2023 we had two investigations. The first was for accessibility, which covered writing many more tests for ARIA computed role and accessible name, and ensuring they could be run in different browsers. The second was for mobile testing, which has resulted in both Mobile Firefox and Chrome for Android having their initial results in wpt.fyi.

Interop 2024

Following the success of Interop 2023, we are pleased to confirm that the project will continue in 2024 with a new selection of focus areas, representing areas of the web platform where we think we can have the biggest positive impact on users and web developers.

New Focus Areas

New focus areas for 2024 include, among other things:

- Popover API – This provides a declarative mechanism to create content that always renders in the top layer, so that it overlays other web page content. This can be useful for building features like tooltips and notifications. Support for popover was the #1 author request in the recent State of HTML survey.

- CSS Nesting – This is a feature that’s already shipping, which allows writing more compact and readable CSS files, without the need for external tooling such as preprocessors. However different browsers shipped slightly different behavior based on different revisions of the spec, and Interop will help ensure that everyone aligns on a single, reliable, syntax for this popular feature.

- Accessibility – Ensuring that the web is accessible to all users is a critical part of Mozilla’s manifesto. Our ability to include Accessibility testing in Interop 2024 is a direct result of the success of the Interop 2023 Accessibility Investigation in increasing the test coverage of key accessibility features.

The full list of focus areas is available in the project README.

Carryover

In addition to the new focus areas, we will carry over some of the 2023 focus areas where there’s still more work to be done. Of particular interest is the Layout focus area, which will combine the previous Flexbox, Grid and Subgrid focus areas into one area covering all the most important layout primitives for the modern web. On top of that, the Custom Properties, URL and Mouse and Pointer Events focus areas will be carried over. These represent cases where, even though we’ve already seen large improvements in interoperability, we believe that users and web authors will benefit from even greater convergence between implementations.

Investigations

As well as focus areas, Interop 2024 will also feature a new investigation into improving the integration of WebAssembly testing into web-platform-tests. This will open up the possibility of including WASM features in future Interop projects. In addition we will extend the Accessibility and Mobile Testing investigations, as there is more work to be done to make those aspects of the platform fully testable across different implementations.

Partner Announcements

- Apple: The web just gets better with Interop, now for 2024

- Bocoup: Interop 2024

- Google: Interop 2024

- Igalia: Interop 2024 Launches

- Microsoft: Microsoft Edge and Interop 2024

The post Announcing Interop 2024 appeared first on Mozilla Hacks - the Web developer blog.

The post Option Soup: the subtle pitfalls of combining compiler flags appeared first on Mozilla Hacks - the Web developer blog.

During the Firefox 120 beta cycle, a new crash signature appeared on our radars with significant volume.

At that time, the distribution across operating systems revealed that more than 50% of the crash volume originated from Ubuntu 18.04 LTS users.

The main process crashes in a CanvasRenderer thread, with the following call stack:

0 firefox std::locale::operator=
1 firefox std::ios_base::imbue
2 firefox std::basic_ios<char, std::char_traits<char> >::imbue
3 libxul.so sh::InitializeStream<std::__cxx11::basic_ostringstream<char, std::char_traits<char>, std::allocator<char> > > /build/firefox-ZwAdKm/firefox-120.0~b2+build1/gfx/angle/checkout/src/compiler/translator/Common.h:238
3 libxul.so sh::TCompiler::setResourceString /build/firefox-ZwAdKm/firefox-120.0~b2+build1/gfx/angle/checkout/src/compiler/translator/Compiler.cpp:1294
4 libxul.so sh::TCompiler::Init /build/firefox-ZwAdKm/firefox-120.0~b2+build1/gfx/angle/checkout/src/compiler/translator/Compiler.cpp:407
5 libxul.so sh::ConstructCompiler /build/firefox-ZwAdKm/firefox-120.0~b2+build1/gfx/angle/checkout/src/compiler/translator/ShaderLang.cpp:368
6 libxul.so mozilla::webgl::ShaderValidator::Create /build/firefox-ZwAdKm/firefox-120.0~b2+build1/dom/canvas/WebGLShaderValidator.cpp:215
6 libxul.so mozilla::WebGLContext::CreateShaderValidator const /build/firefox-ZwAdKm/firefox-120.0~b2+build1/dom/canvas/WebGLShaderValidator.cpp:196
7 libxul.so mozilla::WebGLShader::CompileShader /build/firefox-ZwAdKm/firefox-120.0~b2+build1/dom/canvas/WebGLShader.cpp:98

At first glance, we want to blame WebGL. The C++ standard library functions cannot be at fault, right?

But when looking at the WebGL code, the crash occurs in the perfectly valid lines of C++ summarized below:

std::ostringstream stream;
stream.imbue(std::locale::classic());

This code should never crash, and yet it does. In fact, taking a closer look at the stack gives a first lead for investigation:

Although we crash into functions that belong to the C++ standard library, these functions appear to live in the firefox binary.

This is an unusual situation that never occurs with official builds of Firefox.

It is however very common for distributions to change the configuration settings and apply downstream patches to an upstream source, so no worries about that.

Moreover, there is only a single build of Firefox Beta that is causing this crash.

We know this thanks to a unique identifier associated with any ELF binary.

Here, if we choose any specific version of Firefox 120 Beta (such as 120b9), the crashes all embed the same unique identifier for firefox.

Now, how can we guess what build produces this weird binary?

A useful user comment mentions that they regularly experience this crash since updating to 120.0~b2+build1-0ubuntu0.18.04.1.

And by looking for this build identifier, we quickly reach the Firefox Beta PPA.

Then indeed, we are able to reproduce the crash by installing it in an Ubuntu 18.04 LTS virtual machine: it occurs when loading any WebGL page!

With the binary now at hand, running nm -D ./firefox confirms the presence of several symbols related to libstdc++ that live in the text section (T marker).

Templated and inline symbols from libstdc++ usually appear as weak (W marker), so there is only one explanation for this situation: firefox has been statically linked with libstdc++, probably through -static-libstdc++.
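As a complement to inspecting the binary with nm, the same question can be approached from inside a running process by listing the ELF objects the dynamic loader has mapped. The following is only a minimal sketch, assuming Linux with glibc (or another libc that provides dl_iterate_phdr); the helper names loaded_objects, looks_like_shared_libstdcxx and uses_shared_libstdcxx are hypothetical and are not part of Firefox or libstdc++.

```cpp
#include <link.h>   // dl_iterate_phdr, dl_phdr_info (assumes an ELF libc such as glibc)
#include <cstddef>
#include <string>
#include <vector>

// Callback invoked once per loaded ELF object; the main program comes first.
static int collect_name(dl_phdr_info* info, std::size_t /*size*/, void* data) {
    auto* names = static_cast<std::vector<std::string>*>(data);
    names->emplace_back(info->dlpi_name ? info->dlpi_name : "");
    return 0;  // returning 0 tells dl_iterate_phdr to keep iterating
}

// Paths of all objects currently mapped into this process.
// The main executable is usually reported with an empty name.
std::vector<std::string> loaded_objects() {
    std::vector<std::string> names;
    dl_iterate_phdr(collect_name, &names);
    return names;
}

// Hypothetical helper: does this path look like a shared libstdc++?
bool looks_like_shared_libstdcxx(const std::string& path) {
    return path.find("libstdc++.so") != std::string::npos;
}

// True if the dynamic loader mapped a shared libstdc++ into the process.
bool uses_shared_libstdcxx() {
    for (const std::string& path : loaded_objects())
        if (looks_like_shared_libstdcxx(path)) return true;
    return false;
}
```

If a program clearly uses C++ standard library facilities and uses_shared_libstdcxx() still returns false, that is a hint (not a proof) that libstdc++ was linked statically, which is what the T markers suggest here.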

Fortunately, the build logs are available for all Ubuntu packages.

After some digging, we find the logs for the 120b9 build, which indeed contain references to -static-libstdc++.

But why?

Again, everything is well documented, and thanks to well-trained digging skills we reach a bug report that provides interesting insights.

Firefox requires a modern C++ compiler, and hence a modern libstdc++, which is unavailable on old systems like Ubuntu 18.04 LTS.

The build uses -static-libstdc++ to close this gap.

This just explains the weird setup though.

What about the crash?

Since we can now reproduce it, we can launch Firefox in a debugger and continue our investigation.

When inspecting the crash site, we seem to crash because std::locale::classic() is not properly initialized.

Let’s take a peek at the implementation.

const locale& locale::classic()
{
  _S_initialize();
  return *(const locale*)c_locale;
}

_S_initialize() is in charge of making sure that c_locale will be properly initialized before we return a reference to it.

To achieve this, _S_initialize() calls another function, _S_initialize_once().

void locale::_S_initialize()
{
#ifdef __GTHREADS
  if (!__gnu_cxx::__is_single_threaded())
    __gthread_once(&_S_once, _S_initialize_once);
#endif
  if (__builtin_expect(!_S_classic, 0))
    _S_initialize_once();
}

In _S_initialize(), we first go through a wrapper for pthread_once(): the first thread that reaches this code consumes _S_once and calls _S_initialize_once(), whereas other threads (if any) are stuck waiting for _S_initialize_once() to complete.

This looks rather fail-proof, right?

There is even an extra direct call to _S_initialize_once() if _S_classic is still uninitialized after that.
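The once-only pattern described here can be sketched in portable C++ with std::call_once, which plays a role similar to the __gthread_once wrapper around pthread_once(). This is an illustration under assumptions, not the libstdc++ implementation: the names g_once, g_value, init_once, get_value and run_threads are hypothetical.

```cpp
#include <atomic>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical names for illustration; none of this is libstdc++ code.
static std::once_flag g_once;             // plays the role of _S_once
static int* g_value = nullptr;            // plays the role of _S_classic
static std::atomic<int> g_init_calls{0};  // counts how often init_once() ran

static void init_once() {
    static int storage = 42;              // plays the role of c_locale_impl
    g_value = &storage;
    ++g_init_calls;
}

// Every caller funnels through call_once: the first one runs init_once(),
// concurrent callers block until it has finished.
int get_value() {
    std::call_once(g_once, init_once);
    return *g_value;
}

// Calls get_value() from n threads and returns how many times
// init_once() has run in total.
int run_threads(int n) {
    std::vector<std::thread> threads;
    for (int i = 0; i < n; ++i)
        threads.emplace_back([] { (void)get_value(); });
    for (auto& t : threads) t.join();
    return g_init_calls.load();
}
```

However many threads race into get_value(), the initializer is expected to run exactly once, as long as all callers agree on which once flag and which storage they are talking about.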

Now, _S_initialize_once() itself is rather straightforward: it allocates _S_classic and puts it within c_locale.

void
locale::_S_initialize_once() throw()
{
  // Need to check this because we could get called once from _S_initialize()
  // when the program is single-threaded, and then again (via __gthread_once)
  // when it's multi-threaded.
  if (_S_classic)
    return;

  // 2 references.
  // One reference for _S_classic, one for _S_global
  _S_classic = new (&c_locale_impl) _Impl(2);
  _S_global = _S_classic;
  new (&c_locale) locale(_S_classic);
}
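The function above relies on placement new: it does not allocate memory, it constructs objects inside storage that already exists. A minimal sketch of that technique follows, with a hypothetical Impl type and hypothetical names impl_storage, g_impl and get_impl standing in for the libstdc++ internals.

```cpp
#include <new>  // placement new

// Hypothetical type and names for illustration only.
struct Impl {
    int refcount;
    explicit Impl(int refs) : refcount(refs) {}
};

// Raw, suitably aligned static storage: the bytes exist from program start,
// but no Impl object lives in them until placement new runs.
alignas(Impl) static unsigned char impl_storage[sizeof(Impl)];
static Impl* g_impl = nullptr;

// Construct the object in place on first use, then keep returning the
// same pointer. Not thread-safe on its own; a once guard is still needed.
Impl* get_impl() {
    if (!g_impl)
        g_impl = new (impl_storage) Impl(2);
    return g_impl;
}
```

The important property for the rest of the story is that the pointer and the storage are two separate variables: the pointer can look initialized while a given copy of the storage has never been constructed.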

The crash looks as if we never went through _S_initialize_once(), so let’s put a breakpoint there and see what happens.

And just by doing this, we already notice something suspicious.

We do reach _S_initialize_once(), but not within the firefox binary: instead, we only ever reach the version exported by liblgpllibs.so.

In fact, liblgpllibs.so is also statically linked with libstdc++, such that firefox and liblgpllibs.so both embed and export their own _S_initialize_once() function.

By default, symbol interposition applies, and _S_initialize_once() should always be called through the procedure linkage table (PLT), so that every module ends up calling the same version of the function.

If symbol interposition were happening here, we would expect that liblgpllibs.so would reach the version of _S_initialize_once() exported by firefox rather than its own, because firefox was loaded first.

So maybe there is no symbol interposition.

This can occur when using -fno-semantic-interposition.

Each version of the standard library would live on its own, independent from the other versions.

But neither the Firefox build system nor the Ubuntu maintainer seem to pass this flag to the compiler.

However, by looking at the disassembly for _S_initialize() and _S_initialize_once(), we can see that the exported global variables (_S_once, _S_classic, _S_global) are subject to symbol interposition:

These accesses all go through the global offset table (GOT), so that every module ends up accessing the same version of the variable.

This seems strange given what we said earlier about _S_initialize_once().

Non-exported global variables (c_locale, c_locale_impl), however, are accessed directly without symbol interposition, as expected.

We now have enough information to explain the crash.

When we reach _S_initialize() in liblgpllibs.so, we actually consume the _S_once that lives in firefox, and initialize the _S_classic and _S_global that live in firefox.

But we initialize them with pointers to well initialized variables c_locale_impl and c_locale that live in liblgpllibs.so!

The variables c_locale_impl and c_locale that live in firefox, however, remain uninitialized.

So if we later reach _S_initialize() in firefox, everything looks as if initialization has happened.

But then we return a reference to the version of c_locale that lives in firefox, and this version has never been initialized.

Boom!
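The sequence of events can be replayed in a toy single-file model, without any linker involved. This is only a sketch under simplifying assumptions: the Exported struct stands in for the interposed variables that both modules share, and each Module stands in for one statically linked copy of the locale code with its own private storage. All type and function names are hypothetical.

```cpp
// Toy single-file model of the crash; none of this is libstdc++ code.

// Exported variables: interposition makes every module use one shared copy.
struct Exported {
    bool once_done = false;          // stands in for _S_once
    const void* classic = nullptr;   // stands in for _S_classic
};

// One statically linked copy of the locale code plus its private storage.
struct Module {
    Exported* exported;              // reached through the GOT: shared
    bool c_locale_built = false;     // stands in for c_locale: private

    explicit Module(Exported* e) : exported(e) {}

    void initialize_once() {
        if (exported->classic) return;
        exported->classic = &c_locale_built;  // points into THIS module
        c_locale_built = true;
    }

    void initialize() {
        if (!exported->once_done) {  // models the __gthread_once call
            exported->once_done = true;
            initialize_once();
        }
        if (!exported->classic) initialize_once();
    }

    // Models classic(): reports whether the object it would return was built.
    bool classic_is_usable() {
        initialize();
        return c_locale_built;
    }
};

struct Outcome { bool lgpllibs_ok; bool firefox_ok; };

Outcome simulate() {
    Exported shared;                 // the copies that live in firefox
    Module firefox(&shared);
    Module lgpllibs(&shared);
    Outcome out;
    out.lgpllibs_ok = lgpllibs.classic_is_usable();  // first caller initializes
    out.firefox_ok = firefox.classic_is_usable();    // skips initialization
    return out;
}
```

In this model the module that initializes first ends up with a usable object, while the second module sees the shared flags as already set and hands out its own storage, which was never constructed.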

Now the main question is: why do we see interposition occur for _S_once but not for _S_initialize_once()?

If we step back for a minute, there is a fundamental distinction between these symbols: one is a function symbol, the other is a variable symbol.

And indeed, the Firefox build system uses the -Bsymbolic-functions flag!

The ld man page describes it as follows:

-Bsymbolic-functions
    When creating a shared library, bind references to global function symbols to the definition within the shared library, if any. This option is only meaningful on ELF platforms which support shared libraries.

As opposed to:

-Bsymbolic
    When creating a shared library, bind references to global symbols to the definition within the shared library, if any. Normally, it is possible for a program linked against a shared library to override the definition within the shared library. This option is only meaningful on ELF platforms which support shared libraries.

Nailed it!

The crash occurs because this flag makes us use a weird variant of symbol interposition, where symbol interposition happens for variable symbols like _S_once and _S_classic but not for function symbols like _S_initialize_once().

This results in a mismatch regarding how we access global variables: exported global variables are unique thanks to interposition, whereas every non-interposed function will access its own version of any non-exported global variable.

With all the knowledge that we have now gathered, it is easy to write a reproducer that does not involve any Firefox code:

/* main.cc */
#include <iostream>

extern void pain();

int main() {
  pain();
  std::cout << "[main] " << std::locale::classic().name() << "\n";
  return 0;
}

/* pain.cc */
#include <iostream>

void pain() {
  std::cout << "[pain] " << std::locale::classic().name() << "\n";
}

# Makefile
# Recipe lines must start with a tab character.
all:
	$(CXX) pain.cc -fPIC -shared -o libpain.so -static-libstdc++ -Wl,-Bsymbolic-functions
	$(CXX) main.cc -fPIC -c -o main.o
	$(CC) main.o -fPIC -o main /usr/lib/gcc/x86_64-redhat-linux/13/libstdc++.a -L. -Wl,-rpath=. -lpain -Wl,-Bsymbolic-functions
	./main

clean:
	$(RM) libpain.so main main.o

Understanding the bug is one step, and solving it is yet another story.

Should it be considered a libstdc++ bug that the code for locales is not compatible with -static-libstdc++ -Bsymbolic-functions?

It feels like combining these flags is a very nice way to dig our own grave, and that indeed seems to be the opinion of the libstdc++ maintainers.

Overall, perhaps the strangest part of this story is that this combination did not cause any trouble up until now.

Therefore, we suggested to the maintainer of the package to stop using -static-libstdc++.

There are other ways to use a different libstdc++ than the one available on the system, such as using dynamic linking and setting an RPATH to link with a bundled version.

Doing that allowed them to successfully deploy a fixed version of the package.

A few days after that, with the official release of Firefox 120, we noticed a very significant bump in volume for the same crash signature. Not again!

This time the volume was coming exclusively from users of NixOS 23.05, and it was huge!

After we shared the conclusions from our beta investigation with them, the maintainers of NixOS were able to quickly associate the crash with an issue that had not yet been backported for 23.05 and was causing the compiler to behave like -static-libstdc++.

To avoid such a mess in the future, we added detection for this particular setup in Firefox’s configure.

We are grateful to the people who have helped fix this issue, in particular:

- Rico Tzschichholz (ricotz) who quickly fixed the Ubuntu 18.04 LTS package, and Amin Bandali (bandali) who provided help on the way;